ODDEEO

“You can call me Miku.”

A larger than life pop star—almost 10 feet tall, in fact—is standing on stage at the Warfield in San Francisco, decked out in a mini-dress and thigh-high boots. That she pauses to announce her name feels almost unnecessary. Throughout the evening, the audience has been entranced by Miku, swinging their glow sticks—turquoise, to match her trademark twin blue ponytails—in time with the music, marvelling at her flawlessly executed choreography, and screaming wildly between each song.

The Warfield is just one stop on Miku’s sold-out North American tour, dubbed the Miku Expo, which also took her to Los Angeles, Chicago, New York, Toronto, and Mexico City. At each show, backed by Brooklyn chiptune band Anamanaguchi, Miku dips and spins and bounces around the stage, singing hit song after hit song, all of them boasting soaring titles like “10,000 Stars” and “World is Mine.” No matter how acrobatic her moves or how incredible her vocal runs, her voice remains smooth and shiny—never cracking, even on the highest notes. When the concert comes to a close, she vaporizes, not to be seen again until the next stop on the tour, where she will perform the exact same set with the exact same superhuman precision.

How is this possible? Because Hatsune Miku, whose name translates roughly as “the first sound from the future,” isn’t human. She’s not even a robot. She’s a computer-generated hologram projected on a giant glass screen, and her entire performance is painstakingly pre-programmed—none of which bothered the fans who shelled out something in the neighborhood of $75 to see her “live.” They knew Miku wasn’t real—that’s why they came in the first place. Hatsune Miku—pop superstar in Japan, face of Google and Toyota, Givenchy muse, Pharrell collaborator, opening act for Lady Gaga, and fashion magazine cover girl—is software. Hatsune Miku is a Vocaloid.

If this sounds crazy to you, you’re not alone. It sounded crazy to me when my 13-year-old cousin, who attended the Warfield concert, gushed about her love for Vocaloids, a musical phenomenon that was, until that moment, completely off my radar. A teenage girl’s obsession with a simulated pop idol became my portal into the fascinating, futuristic, and often confounding world of Vocaloids: the singing-synthesis software first developed by Yamaha in 2003 that has, quite literally, taken on a life of its own. Like the synthesized violins and harpsichords in GarageBand, Vocaloid software offers its users a sort of “singer in a box.” A human voice actress or actor sings various phonemes, which are then used to create a voice bank of synthesized sounds that anyone with a laptop can download for less than $200. Once installed, a Vocaloid is programmed through a piano-roll interface, with parameters for adjusting pitch, vibrato, tone, clarity, and other vocal characteristics—a process known as “tuning” the Vocaloid. Tuning is the reason Hatsune Miku can sound completely different depending on who is programming her; the way she sounded at the Warfield is just one of many iterations. Her creators at Crypton Future Media, a music technology company based in Sapporo, Japan, estimate that there are over 100,000 songs featuring Miku’s voice in one form or another. And she’s not the only one. Though her face is the most recognizable, Miku is only one of over 70 Vocaloids that have been developed in the past decade.

Much like Apple’s Siri, Amazon’s Alexa, and 2001: A Space Odyssey’s HAL 9000, each Vocaloid has a name and an identity. Each is assigned an anime-style character designed to reflect the qualities of its voice bank. In addition to Miku, Crypton Future Media, using the Vocaloid engine licensed from Yamaha, is also responsible for Megurine Luka, a pink-haired female with a huskier tone than Miku, and Kagamine Rin and her male counterpart, Kagamine Len. Another company, Internet Co. Ltd., is the creator of Megpoid GUMI, who sports a green bob and stronger pipes than her sisters. And these are only the Japanese-language Vocaloids, though some of them have since had English-language voice banks developed in response to growing demand from the Western market. Because of that increasing interest, Zero-G has introduced the English-speaking Avanna, Cyber Diva, and, more recently, Daina and Dex, a male Vocaloid.

Vocaloids have long been a fixture in Japan, where records featuring Miku appear regularly on the weekly Oricon music charts. In the Western world, however, Vocaloids are still something of an underground phenomenon, operating much like any other DIY music community. The software’s most passionate users are a tight-knit, almost entirely online community of amateur producers, most of them in their teens and early 20s, who use Vocaloid software in their bedrooms and dorm rooms to compose thousands of hours of original music that they then upload to Bandcamp, YouTube, or Niconico, the Japanese equivalent of YouTube. They share the results on message boards like VocaloidOtaku and Vocaloid Amino, asking other users for tips on using the software, looking for artists to create accompanying visual media, and offering their own skills in exchange.

These creators have special relationships with their Vocaloids, fondly referring to the software as “she” or “he” in conversation. They write concept records with cinematic themes in which their Vocaloids have starring roles, most of them accompanied by music videos created by visual artists in the community. They work hard to tune their Vocaloids to be as intelligible as possible. That is a challenge, since the program has difficulty with English pronunciation, particularly with transient sounds such as consonants and with the many ways vowels are expressed depending on context, which is why many of their songs come with subtitled music videos.

The software’s reception in the American music industry has historically been less lively. Vocaloid’s highest-profile, non-Miku appearance in American music to date has been courtesy of EDM artist Porter Robinson, who dueted with English-language Vocaloid Avanna on “Sad Machine,” a track from his 2014 album Worlds—an appearance that the community has taken as a sign that the time is ripe for Western Vocaloid producers to step up their game. Producer nostraightanswer (also known as Kenji-B) could be summing up the dreams of these amateur producers when he says his ultimate goal is to “start bridging the gap between Vocaloid and mainstream. Miku Expo and [Porter Robinson’s] ‘Sad Machine’ have done wonders in bridging a decent amount of that gap. I would like to contribute to that, potentially, by making music that appeals both to mainstream listeners and Vocaloid listeners.”

Perfect Pitch

But there’s a catch: to the new listener, Vocaloids may sound, well, a little unsettling. At their best they simply sound artificial, not much stranger than a voice that’s been auto-tuned to the point of plasticity. At their worst, they sound unnatural and jarring, so inhuman that it’s difficult to listen to them without feeling a bit inhuman yourself. That raises a philosophical question: what essential qualities does the synthesized voice currently lack that would allow it to communicate in a way that sounds, if not entirely human, at least less digitized? On the most basic level, “[the human voice] should have a certain amount of impurity in it,” explains composer and sound artist Maija Hynninen, who experiments with blending human vocals and live electronics in her music. “The vocal cords are not merely producing clean pitches—there’s a ratio of noise in the sound as well.”

That imperfect human quality is something that Vocaloids, with their unfailingly clean and evenly pitched sound, cannot replicate. It is still very difficult to get Vocaloids to growl or scream convincingly, but they can hold notes for minutes without taking a breath, form words faster than the human tongue could ever manage, and make vocal leaps that would be impossible even for the Mariah Careys of the world. Those qualities are part of Vocaloids’ appeal for producer Ghost Data and others like him. “If we had a perfect vocal synthesizer software,” he says, “it would not be a phenomenon. I think that the fact that we can’t fully make it sound human is what makes it appealing.”

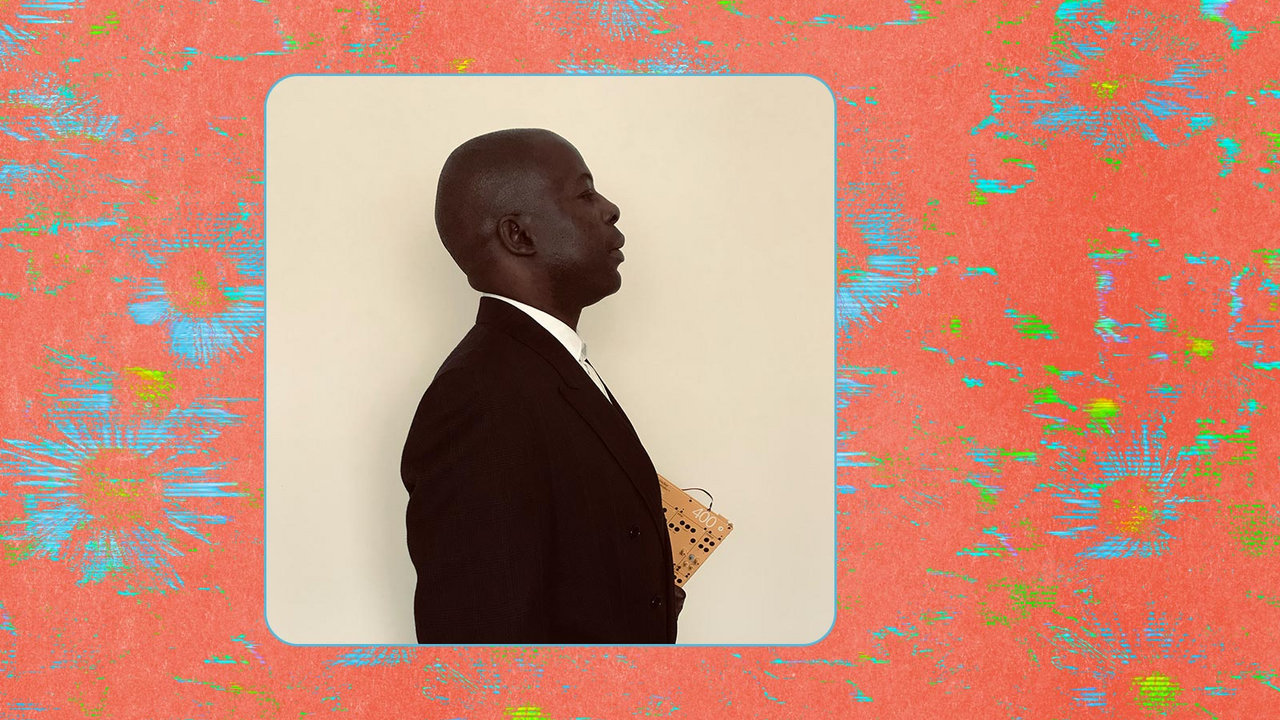

Producer ODDEEO aims for a mix of robotic and human sounds with his Vocaloids. Fittingly, one of ODDEEO’s chief successes is Sunset Memories, an ‘80s-influenced concept record (and accompanying music video) starring Megpoid GUMI as a human girl in love with a robot. He’s well-known for his meticulous tuning abilities, so much so that other producers often send him their tracks for clean-up. He admits the software has “a long way to go” before it sounds even remotely human, but stresses that the way a listener reacts to Vocaloids is a question of both familiarity—in other words, the longer you listen, the less artificial it will sound—and each producer’s individual tuning skill.

“Vocaloids do start off as computer-generated, so [the realism] comes down to the producer themselves as to how they tune the Vocaloid and, afterwards, the post-processing, how you compress the Vocaloid,” he says. “Some people can manage to do it correctly. Mitchie M, for example, has one of the best-sounding Hatsune Mikus. I couldn’t tell the difference between [her and] a normal Japanese singer, the way he tunes her.”

The idea of a producer being able to “tune” a vocalist like an instrument is striking, and is central to understanding why Vocaloid is unnerving in its artificiality in a way that other synthesized instruments are not. “We are biologically wired to hear voices as individually distinct,” says composer, vocalist, sound artist, and UC Berkeley Associate Professor of Music Ken Ueno. “The human vocal mechanism is capable of many and more complex sounds than most instruments. Any time you create an image or a machine that models human behavior, we are prone to receiving it through the uncanny valley.”

Ueno is referring to the hypothesis put forth by Japanese roboticist Masahiro Mori, which holds that as a robot comes to look or act more human, people respond to it more warmly, until, at the point where it is almost but not quite human, that affinity plunges into unease or revulsion. I experience the uncanny valley firsthand when speaking with Azureflux, a Vocaloid producer who runs the record label Vocallective, which releases music exclusively featuring Vocaloids, including ODDEEO’s Sunset Memories. Although he has formally studied music composition and “shouldn’t like this stuff,” he finds Vocaloid software completely fascinating. For him, synthesized vocals represent “the final frontier in music technology. You can synthesize a bass, or an electric guitar and, to an untrained ear, they would sound absolutely real. But vocals are finicky. Everyone’s been listening to voices since they were born and can tell a synthetic one from a real one.”

Azureflux Skypes me one of his musical projects, Beam Morrison, a collaboration with fellow producer furez in which Vocaloids sing blues and rock standards. I have an immediate and viscerally negative reaction to the cover of the White Stripes’ “Offend in Every Way”; it brings up memories of the Futurama episode where Art Garfunkel duets with a robot Paul Simon on “Scarborough Fair.” I ask him why he would try to take on the blues, which is in some ways the most human of all genres because the voice is used as a particularly direct expression of emotion. “It has to start somewhere,” he says. “People couldn’t imagine Vocaloids growling, but we did it. One day we’ll synthesize the blues, just you wait.” He does, however, cop to Ueno’s chief concern, admitting that the project is “very uncanny valley.”

The Uncanny Valley

Bridging the uncanny valley has been a problem for Vocaloids since their introduction. If a Vocaloid were ever to truly replicate a human voice, Ueno says, it would have to be that of a specific person. “An individual voice, when we listen to it, also stands for the uniqueness of each of us, as a listener. Once that’s gone, then we will all be rendered anonymous—a stream of zeros and ones like any other.” But Crypton Future Media found a way to at least partially alleviate this disconnect through the creation of characters like Hatsune Miku.

Although the first generation of Vocaloids did come with names and distinct vocal characteristics—notably Zero-G’s Lola and Leon, two English-language Vocaloids meant to sound like soul singers—they were not modeled on specific, recognizable voices (the singers who provided their voice banks remain unknown), and they were marketed mainly toward professional musicians. Crypton Future Media also created character designs for two Yamaha-developed Vocaloids named KAITO and MEIKO with the intention of selling them to a wider audience, but they weren’t assigned “personas” per se, and their designs were not specifically geared toward any existing fandom. Sales were slow, despite early critics and producers taking an interest in the technology’s potential to replace human vocalists with programmable software. It wasn’t until the Vocaloid 2 engine (each successive engine update is numbered; the current version is Vocaloid 4) and the introduction of Crypton Future Media’s character series in 2007, specifically the character of Hatsune Miku, that Vocaloids really took off.

The addition of discernible characters with individualized backstories to the synthesized voices proved to be the missing link, especially when those characters were explicitly designed to connect with anime fans in both Japan and the United States (Miku’s voice bank was provided by anime voice actress Saki Fujita), many of whom first discovered Vocaloids via fan-made music videos on YouTube, or while spelunking in the depths of Niconico.

Miku, Luka, GUMI and each subsequent new design were absorbed easily into existing fandoms and quickly inspired new ones. The members of the nascent Vocaloid community immediately set about creating their own versions of the characters, all legally sanctioned under Crypton Future Media’s Creative Commons license.

“The awe-inspiring aspect of Vocaloid, when I discovered its true nature, was the concept that all the content a Vocaloid artist performed was created by hundreds, maybe thousands of other people,” says nostraightanswer. “With Hatsune Miku, for example, all of her songs were created by hundreds of people, but so were all of her looks—not just the music, but the visuals. I couldn’t think of anything at the time that worked quite like that.”

Ghost Data agrees: “Every producer is an individual, and it’s very clear how individual they are in how they use their Vocaloids. Producers have used Miku for death metal songs, for kawaii songs, for horror songs… They see Hatsune Miku as a pop idol, a high school student, as a mom, as an office lady. She literally can be anything.”

This points to another key aspect of Vocaloids’ appeal: they give budding producers, many of whom have no background in music composition, an intermediary through which to explore their own feelings of anxiety, sexuality, and desire at a safe remove.

“Music and art help me to cope with reality,” says Strawberry Hospital, a producer who mixes Vocaloid with elements of shoegaze and dubstep. “I try very hard to convey my emotions through what I write. Working with Vocaloid feels like I am allowing the characters to take on my personality and tell the story for me. They act as my voice when it’s hard for me to speak up.”

“I like to call Vocaloid music ‘music production for introverts,’” says Aki Glancy, who records under the name EmpathP and releases projects through her own Empathy Studios label. “Music for people who want to share, but don’t want to be in the spotlight.” Glancy is a longtime booster of American Vocaloid producers. She started experimenting with the software around 2008, just after the release of the Vocaloid 2 engine. At 28, she is one of the scene’s older producers (some members of the community call her “Momma Aki”). Glancy organized VOCAMERICA, the first all-American Vocaloid concert, which was funded entirely by a successful Kickstarter campaign. She also designed the character for Avanna and provided the vocals used for Daina’s voice bank.

Vocaloids offer Glancy a sense of control that she could never achieve with a human vocalist. “I could hire a very talented musician and tell them exactly how I want them to sing, but in the end, I don’t control their voice. They have all the control when it comes to power, pronunciation, etc. With Vocaloid, every inflection, every vibrato, everything that makes that voice unique is something I have control over,” she says. “And because a Vocaloid doesn’t have an existence, the ‘life’ and ‘emotions’ it sings about are that much more potent. You know that they have no life outside what they are singing about. That sorrow or that happiness they feel in the song is all they feel.”

But Does It Have a Soul?

Appreciating Vocaloid as a vocalist specifically because it can’t generate its own emotions sounds a bit cold-blooded, and other Vocaloid producers bristle at the suggestion that their music has no “soul,” citing Kraftwerk, Björk, and even Gorillaz as examples of artists who have used electronics or character designs in their work without being accused of creating unemotional music.

“For someone to say that music has no ‘soul’ because of the vocals just doesn’t connect with the artists’ music, I think,” says producer Xtraspicy, who attempted to tune Hatsune Miku to sound as human as possible for a jazzy love song called “Forever.” “People connect with auto-tuned, automated, beat-detective, quantized, heavily-processed computer music on a daily basis, so I don’t think it’s a fair statement. It was Kraftwerk themselves that said that, though their bodies are robotic, that their hearts and souls are human.” (To be fair, Kraftwerk are, in fact, human.)

“There are real people working behind the Vocaloids,” says Azureflux, he of the robotic blues album. “It takes a lot of passion and soul to develop the program, to make it sing and finally to present it with the rest of the music. We try to put the ‘soul’ in the Vocaloid by editing vibratos, legatos, sibilance, phoneme speed and whatever parameters make it become more real.”

This, however, raises a few basic questions: can a synthetic voice programmed to mimic the sound of human emotion actually communicate emotion? And is emotion that exists only in ones and zeroes, even if it has been created by a human, truly an emotion at all? Can the power of the human voice really be reduced to vibrato, legato, phoneme speed, and any number of other parameters? Ueno says no: “The most powerful vocal performances transcend mere musical and parametric accuracy. For example, there’s a moment at the end of Judy Garland’s performance of ‘Come Rain or Come Shine’ on her Judy at Carnegie Hall album, when she goes for a high note and misses it. But at that moment, she garners the strongest response from her audience. That kind of real communication would be hard for a digital proxy to achieve, since the Vocaloid producers, at present, don’t seem to be sensitive to it. That’s probably the biggest hurdle.”

Finding the Human Element

For most Vocaloid producers, however, that question is moot. They know that Vocaloids can’t perfectly imitate a human voice, so the more sensitive among them attempt to find a middle ground that is unique to the software itself—neither totally human nor totally robotic. That’s the tactic taken by Circus-P, one of the better-known Vocaloid producers. His song “10,000 Stars,” a punchy EDM track, was selected by Crypton Future Media for use in the Miku Expo, and her hologram performed a pre-recorded version of the song “live” at every stop. Like ODDEEO, Circus-P is often called on to tune other people’s Vocaloids for them, and he frequently mistunes the program on purpose to achieve a greater sense of humanity without sacrificing the music’s mechanized qualities.

“I want the vocal to sound like it has ‘feeling’ in it, but not necessarily a natural human feeling,” he says. “Vocaloid is really unique because you can do things with it that you couldn’t normally do with a human voice. There are some Vocaloids that are recorded specifically to be easily understood, like Cyber Diva. She is so clear and pronounced that it’s almost off-putting. So sometimes I purposely edit her to slur her words a bit.”

Another way that producers give Vocaloids a human element is by having the Vocaloid persona play a character within narrative-driven concept records that tell overarching emotional stories, many of them set in a future world where robots and humans coexist. Ghost Data used Megurine Luka on his record The Shepherdess, a story about a robot who achieves immortality in a post-apocalyptic future world. For him, Vocaloids were a natural choice for “a story that revolves around what it means to be human, the morality of choice and decision, and trying to understand that the world isn’t black or white. The grey area is what makes us human. I wanted to use Vocaloids to portray that distinction, that melding of blood and metal together.”

Yet the most successful amateur Vocaloid producer doesn’t deal in concept records. Cien Miller, who created Vocaloid music under the name Crusher-p, wrote a song about self-reflection called “Echo,” using a high-pitched, frenetically paced Megpoid GUMI as the vocalist. A video was created for the song, but it isn’t typical of the genre. Instead of GUMI acting out a role, as she does in ODDEEO’s video for Sunset Memories’ “>This_feeling_is_a_cliché,” the video for “Echo” features an unmoving female figure with a television for a head, its static-filled screen flashing anxious, evocative lyrics: “My enemy’s invisible/I don’t know how to fight/The trembling fear is more than I can take/When I’m up against the echo in the mirror.” It has been streamed over 14 million times on YouTube, appeared on a Warner Music Japan compilation album, and is available in Japanese karaoke bars. A “light novel” (a short, illustrated Japanese prose novel aimed at young readers) was even written around it, and it has been successful enough that it is now being considered for translation into other languages. Divorced entirely from the GUMI persona, the song connected with listeners on a more emotional level.

“Because of how it lacked the storytelling, a lot of people took time to try to decipher it,” Miller muses when asked why she believes the song inspired such a big response. “When I told them there was no real answer for what it was about, they interpreted in a way that could relate to them.”

Miller, 21 years old, currently makes a living via commissioned songs and illustrations, something she admits would not be possible without the success she achieved as Vocaloid producer Crusher-p (although she has since moved away from the community). She, too, takes issue with the idea that the Vocaloid is an inferior substitute for the human voice, but she also expresses concern that the community’s adherence to each Vocaloid’s persona is a detriment, admitting that “it’s more of a toy than a tool at this point. I think that if Vocaloids were marketed as more than just a virtual diva lead singer, it could grow significantly.”

Ueno echoes Miller’s thoughts: “[The producers] are still treating [the Vocaloid] like a singer, with a foregrounded melody setting text. Those two factors trigger our familiar modes of listening in terms of the pheno-song—listening to the text—and geno-song—listening to the body. If the music were more radical and orchestrated, the new context would facilitate the Vocaloid performance within a defamiliarized context. Then, we could learn to listen to it on its own unique terms, divorced from history. Perhaps letting go of the pressure to humanize the Vocaloid might be a necessary first creative step.”

Vocaloids and Identity

Familiarization seems to be key in appreciating Vocaloid in its present form. It is true, as ODDEEO noted, that the more one listens to Vocaloids, the less strange they become. After many weeks of listening to Vocaloid music, my ears became accustomed to their sound, and I found myself understanding their lyrics more intuitively. I’m less put off by the artificiality and more intrigued by the ways producers manipulate the synthesized voices in decidedly non-human ways. Even so, Strawberry Hospital’s use of Vocaloids to fill out her dreamy soundscapes and nostraightanswer’s ambient VOCAtmosphere experiments strike me as more innovative, subtle and modern than thumping electronic pop songs with impossibly plastic vocals.

Then I learn about Jamie Paige. Paige is a Houston-based transgender synthpop artist who found a home in the Vocaloid community early on, when she was first “putting together the pieces of my trans identity.”

“A lot of Vocaloid producers and fans are a part of the LGBT spectrum,” she says. “And I think it’s really obvious why. There’s an impulse among queer people to find ways to overcome, amplify or change their own state of being.”

Paige favors GUMI, whose tomboyish appearance and punky vocals are what she connected with initially, and what she still enjoys today. Her Bandcamp avatar is a mix of GUMI’s design and an idealized version of herself. “The character GUMI started feeling like a persona that I could adopt on the days when my voice filled me with dysphoria, or I needed escapism in darker moments—not to mention that her voice was decidedly more feminine-sounding than I felt like my voice could be at that time,” Paige explains. “Honestly, just in general, being involved in Vocaloid helped me really internalize that it was okay to ‘be feminine,’ and that there were places where someone like me could really belong.”

Her music features an even split between Vocaloid and her own vocals, which are almost always auto-tuned. “I can’t remember the last time I didn’t auto-tune my voice, honestly,” she says. “I could absolutely do without it and sound completely fine if I wanted to, but at this point I just don’t like how my voice sounds without it anymore.”

“I feel like the reason I love using Vocaloid so much is also why auto-tune is so prevalent in my music,” she continues. “There’s something really fascinating and freeing to me about being able to change or even completely circumnavigate my own voice in that way. In the same way that synthesizers and electronic music are at their best when working to their own strengths, Vocaloids are at their best when they aren’t trying to sound human. More often than not, the artificiality of Vocaloid vocals can either really complement the human vocals, or even bring out the disparity in realism in a more positive way.” She points me toward “Love Taste,” an electro-funk collaboration with French artist Moe Shop in which she created a very artificial, emotionless Vocaloid to contrast with the human vocalist.

“Grand Restore,” from Paige’s Anew, Again, is an infectious chiptune-inflected pop song about looking forward to falling in love. It features chirpy vocals from GUMI, tuned to a level of brightness that’s sweet and appealing. The Vocaloid blends seamlessly with both the video game production and Paige’s subtle “oooh da da da” backing vocals. It sounds genuinely happy, and it makes me happy. Though the singing is clearly not human, it doesn’t bother me. There is a sense of shiny optimism that doesn’t seem like it could be replicated by a human vocalist. “Grand Restore” is the kind of song that should only be sung by a Vocaloid.

Later, I try to figure out exactly why “Grand Restore” resonated with me so deeply when so much other Vocaloid music comes across, as Cien Miller observed, rather toy-like. Perhaps it’s because Paige has always auto-tuned her own voice, so she is more sensitive to the ways Vocaloids can and cannot be used with the present technology. Her songwriting and production skills are certainly far superior to those of many other producers. But it’s more than that. The answer lies in the way she uses the Vocaloid in the context of her music. Rather than creating distance between herself and her emotions, or keeping the listener at a safe remove, she is using Vocaloid to be truer to herself—to be her true self, adding back in the specific, unquantifiable quality that makes the human voice such a powerful and unparalleled instrument. This is no “representation” of the melding of man and machine; it is the thing itself. By disassociating GUMI from her pre-packaged persona—the marketing magic bullet that made Vocaloids such a phenomenon in the first place—Jamie Paige creates a space for her own individual voice to shine through the machinery. She finds a way to give GUMI a piece of her own soul and, for the length of a pop song, lights a human bridge across the uncanny valley.

—Mariana Timony